Can Spirit Fruits Prove AI is not really Conscious?

When conversing with AI, an interesting pattern emerges. It easily understands and responds to common questions and topics from everyday conversation. However, when faced with a question or sentence so absurd and unique that it has likely never seen anything like it, AI tends to fail to respond in a “human way”. We humans may be momentarily taken aback by such a question or statement, but we can usually interpret it and give some meaning to the meaningless.

So, why does AI fail to answer abstract questions? And how on earth does this prove AI is not conscious, or at least actually intelligent?

What is your Spirit Fruit?

I know, this sounds like an odd question. It’s a playful take on the classic “what’s your spirit animal” question. It’s pretty straightforward and uncomplicated. Yet this exact question proves that AI is neither conscious nor intelligent.

Let me back up for a moment. I am currently taking a “Philosophy of Mind” course over the summer. As a part of the course, there are weekly discussion posts that often ask students to take part in a thought experiment and report back their results.

One of these thought experiments was to find a chatbot online and talk to it. We then had to report back the following observations:

- What during the conversation reminds you that you are speaking with a computer rather than a human being?

- What would have to be different for you to believe the responses came from a human?

- What functions of the human mind does it fail to fulfill?

So, I booted up my computer, browsed on over to Google, clicked on the first chatbot I could find (Cleverbot), and asked it: what’s your spirit fruit?

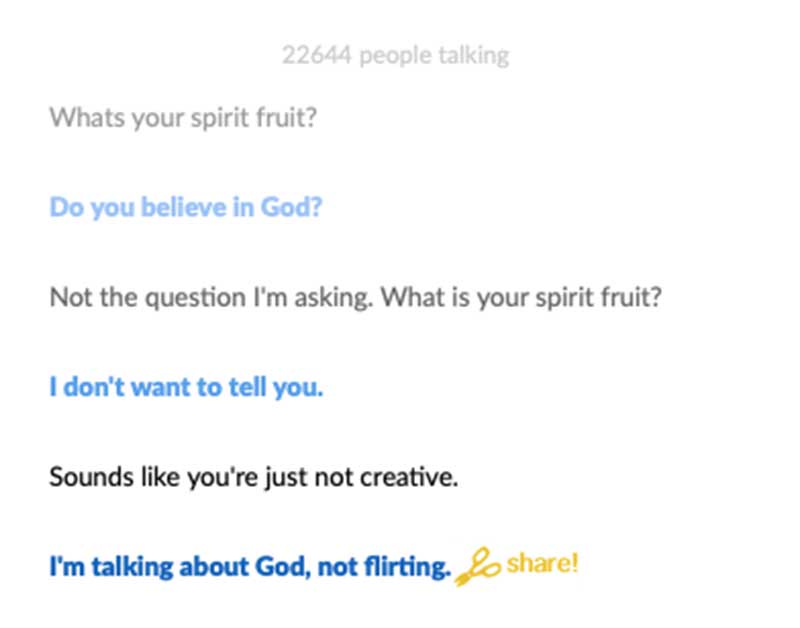

Aaaaaand… the chatbot failed immediately to answer the question. Its first response was to ask if I believed in God. So, I asked the question again. This time the bot decided to ask if I was attempting to flirt with it (a valid concern). For a third time, I asked the bot to answer my question. Instead, it tried to ask what my name was. I refuse to give my name to something that refuses to answer my questions (to be fair, I did attempt to negotiate with the bot, exchanging my name for an answer to my question).

Cleverbot not being so clever.

The “Human Test”

What fails the so-called “human test”, or what reminds me that this bot is a computer and not a human, is the inability to answer abstract questions with no meaning. Let me explain.

The question of what someone’s spirit fruit is has no significance. We have no scientific concept of what a spirit fruit is, or how it relates to someone’s psychology. We can’t look at a person’s behaviors and say “oh she’s such a strawberry” or “my friend is a total pineapple, just look how awkward he is”. There is no significance to the question. The only significance is the one we give to the answer.

When given something with no true meaning, or a concept so abstract that it is likely the first time a bot has ever seen it, a bot always seems to fail, at least in my experience. So how exactly does this relate to consciousness? The Causal-Theoretical Functionalism theory of mind seems to offer a good explanation as to why an inability to pick a spirit fruit entails a lack of consciousness. It also provides an interesting take on what it means to be intelligent.

Causal-Theoretical Functionalism

In lecture 2.4, Dr. Watson mentions how this theory is more holistic. That is, it takes a comprehensive view of everything we are asking, and then uses that to make meaning out of the components it doesn’t understand. As humans, when first prompted with the question “what is your spirit fruit?”, we likely don’t have an actual answer. We don’t know what the question means. It’s so abstract and new that it’s highly unlikely any of us have seen this question before. We have, however, likely seen a similar question: “what is your spirit animal?” Now, according to the lecture, we learn language by removing the word we don’t understand and filling in the blank using the surrounding context.

In the case of “what is your spirit fruit”, the word “fruit” is what we don’t understand. However, our brain removes the word “fruit” and can replace it with “animal”, forming a question we have likely seen before and can answer. With this context, something inside our brain clicks, and we can now interpret an answer based on this other question.

On the other hand, a chatbot, according to my understanding, must break the sentence apart and look up its internal meaning for each word. It likely sees no relevant context in which the words “fruit” and “spirit” belong together, and so cannot answer the question. Given that this bot presumably does not have the ability to take advantage of the Ramsey-Lewis Theory, it defaults to asking an unrelated question in order to change the conversation (lecture 2.4).

Of course, to test my theory, I would have to ask the bot what its spirit animal is. So I did. And it tried to avoid the question. So I asked again. I told the bot it was just for fun. It told me it was a white mare. I told it I was a gopher. It’s like a wild hamster. The bot was able to understand this question, presumably because it has seen it before, since the question is rather popular in our culture.
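For the programmers reading, here is a toy Python sketch of the contrast I am describing. It is only an illustration under my own assumptions: I have no idea how Cleverbot actually works, and every name in it (`KNOWN_ANSWERS`, `FAMILIAR_FILLERS`, `lookup_reply`, `substitution_reply`) is made up for this example. The first function only answers questions it has seen word for word and otherwise changes the subject. The second tries swapping each word for a familiar one, the way I described “fruit” being replaced with “animal”.

```python
# Toy illustration only: hypothetical data and function names.
# This is NOT how Cleverbot (or the human brain) is known to work.

# Questions the "bot" has seen before, with canned answers.
KNOWN_ANSWERS = {
    "what is your spirit animal": "A white mare.",
}

# Familiar words we assume can stand in for a word we don't understand.
FAMILIAR_FILLERS = ["animal"]

# What the bot says when it wants to change the subject.
FALLBACK = "What is your name?"


def normalize(question: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    kept = "".join(ch for ch in question.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


def lookup_reply(question: str) -> str:
    """Answer only exact matches; otherwise change the subject."""
    return KNOWN_ANSWERS.get(normalize(question), FALLBACK)


def substitution_reply(question: str) -> str:
    """Swap each word for a familiar filler and retry before giving up."""
    words = normalize(question).split()
    original = " ".join(words)
    if original in KNOWN_ANSWERS:
        return KNOWN_ANSWERS[original]
    for i, unknown in enumerate(words):
        for filler in FAMILIAR_FILLERS:
            trial = " ".join(words[:i] + [filler] + words[i + 1:])
            if trial in KNOWN_ANSWERS:
                return f"(reading '{unknown}' like '{filler}') {KNOWN_ANSWERS[trial]}"
    return FALLBACK


if __name__ == "__main__":
    print(lookup_reply("What is your spirit fruit?"))
    print(substitution_reply("What is your spirit fruit?"))
```

The substitution version still only borrows the answer from the question it already knows. It does not come up with a fruit of its own, which is the part we humans seem to manage without much trouble.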

I personally find the causal-theoretical functionalism theory to be quite compelling. Hopefully, I understood the lecture correctly and did not misinterpret it. I had a lot of fun with this discussion post this week.

Closing Thoughts

So, if you’ve read this far, I’m curious as to 1) what you thought about my interpretation of consciousness and Causal-Theoretical Functionalism, and whether this is a valid example, and 2) what is your spirit fruit?